
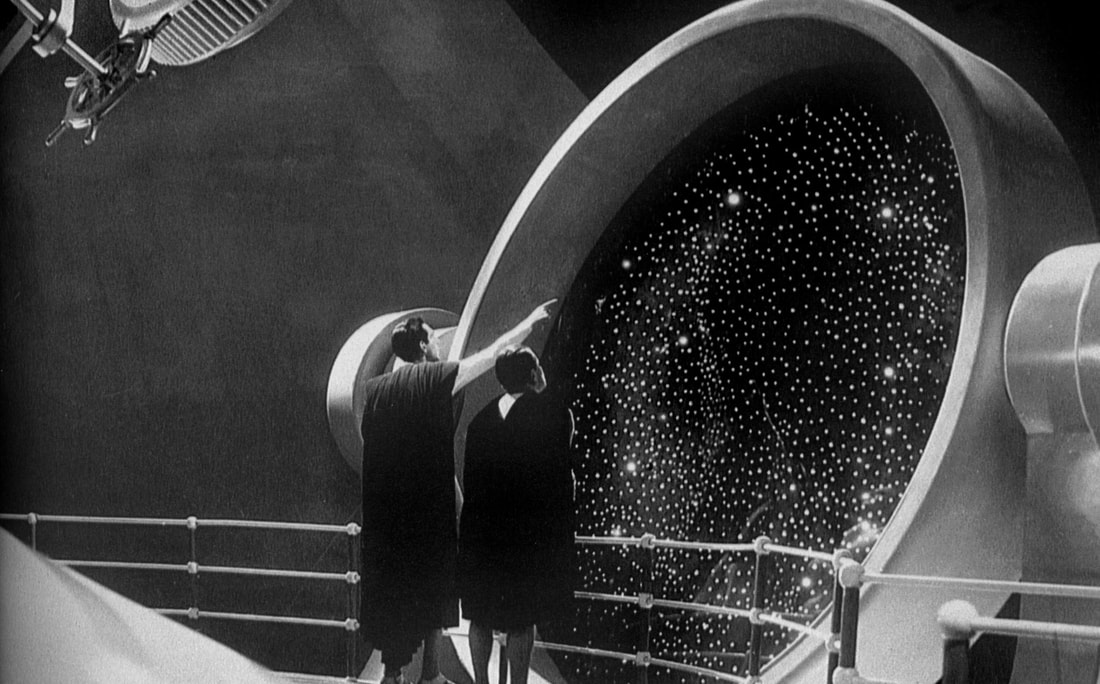

The Exploring Tomorrow podcast, by filmmaker, director and writer, Mikel J Wisler, has interviewed me on his wonderful, uber-nerdy show. It was a lovely chat, and Mikel was a warm and charming host. Super-nerdy writers of sci-fi and spec-fic may enjoy the conversation.

https://www.youtube.com/watch?v=yLJS6hgeFb0


Last year, in the middle of releasing a debut novel, mid-pandemic, with no marketing plan or budget, I blagged a surprise collaboration with Tasmanian playwright, Stephanie Briarwood, funded by TasWriters. The funding was part of a 'we'd better keep them alive and off the streets' strategy by government to keep performers and other creatives from being seen in public, so, long-story-short, I co-wrote a kinda, sorta play - a speculative fiction narrative set in the near-future, in glorious down-town Burnie. We intended it as a one-hour screenplay, but Steph and I didn't know where the work would end up, so we were chuffed to be told Blue Cow were going to do a readthrough-performance - irl! Watching their performance, and their skills in action, was wonderful. Stephanie, a seasoned professional, cheerfully mocked me as a 'theatre virgin' when I misted up, made unexpectedly emotional by the characters evolving through their narratives. So, a huge thank you to all involved. I'd love to see the actors encore in an actual filmed version of that one day in the life of an illegal taxi driver, Hong Kong refugee, Wendy Chen, and her growing family of passengers. Anyone need a super low-budget, locally made 100% Tasmanian drama script?

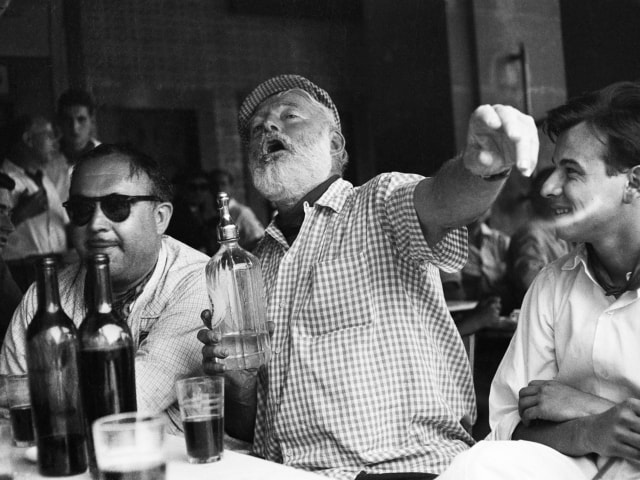

Obviously a mistake has been made. I've been invited to a writers' festival to be some kind of literary cage-fighter with an impressive group of real writers. Having never been to one of these things, I'm honestly not sure what to expect other than heavy drinking and arguments. I imagine it will start out reasonably civilised... and then descend into some kind of fierce competitive intellectualism in which the caged writers entertain the bellowing, half-naked crowds with things I'm unfamiliar with, like wit, and bon mots, and incisive insights into la condition humaine. Etc. As each writer tries to top the others, and put them down in ever-cleverer ways, things are bound to turn nasty. My strategy will definitely be to get drunk quickly and throw the first punches. My rationale is that, sufficiently intoxicated, blows I fail to deflect will hurt less, and I'm sure to win audience plaudits and tossed coins by being ready to kick it all off. In any event, I've looked at the competition, and I'm pretty sure I can take down John Marsden, Clare Hooper and Bob Brown if I progress to the finals, but first I have sessions with Alex Landragin, Alison Croggon and Nike Sulway, then a 'conversation', presumably in some kind of bear-baiting pit, with Ramona Koval, then finishing the Saturday with an all-in mass-brawl with Koval (if she's still able to fight), Tony Birch, Mirandi Riwoe, and Rebecca Jessen. I hope to see some of you at the bar afterwards! Slainte!

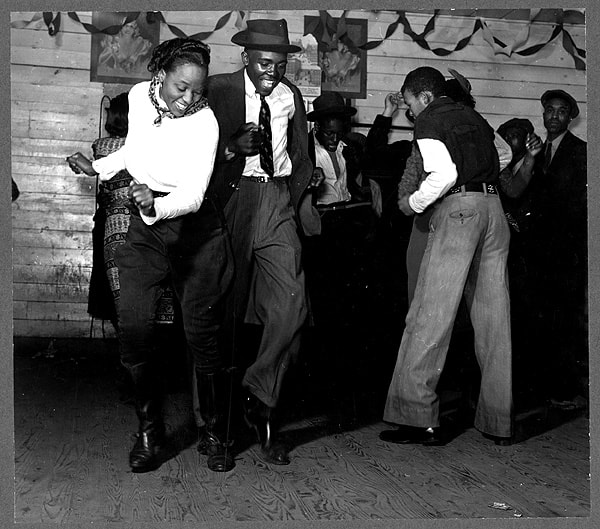

Wondering why humans aren't getting their shit together? Our biology is holding us back. In small tribal groups, most destructive thinking and behaviours are controlled, contained and corrected to that which will sustain the tribe. That’s how we’re hardwired. But on our globalised Earth, forms of meta-intelligence have emerged that overwhelm our individual and tribal intelligences. Organised religions, political ideologies and global corporations, each made up of myriads of functional humans, are working toward the unintended consequences of war, unstoppable climate crisis and mass species extinction.

How are the ‘advanced’ future humans and aliens in your science fiction able to function cooperatively and sustainably long enough to evolve beyond behaviours that once served them well but became self-destructive? Individual and collective intelligence aside, how do you design a conscious creature who can achieve what we’re failing at: long-term survival?

Here we have another problem. We don’t know what consciousness is or how it emerges; it’s still one of the great frontiers of science. When you drill down, even the term ‘consciousness’ becomes useless. Other than in the strict medical sense (the Glasgow Coma Scale), ‘consciousness’ isn’t even a thing. I, for example, don’t exist.

Who is writing this if it isn’t a conscious, incredibly nerdy being? If you now punch me on the arm, you may reasonably ask who is feeling the pain if it isn’t a conscious being, an ‘I’. But where are the words forming your question coming from? Is there a mini-you, sitting in your brain at a big meaty control station, working at lightning speed to collate thoughts, edit words and issue your response? Nope, it’s all just collectively emerging from specialised meat, linked by basic chemistry and physics. As we talk, we have no awareness of what’s happening in our brains to enable us to do so.

Physiology and scans don’t tell us how our sense of self can appear certain when all indicators are that it’s merely a recursive representation, an avatar to assist the global brain function at ‘higher’, differently effective, and affective, levels. A dog is perfectly intelligent in its own way. So is slime mould, so is a young child, and they all need to distinguish ‘self’ from ‘other’. But they are conscious in a different, less complex way to us. The thing we call ‘consciousness’ is an evolving, ever-changing spectrum, in multiple dimensions.

Worse, there’s a second way ‘you’ don’t exist. The ‘you’ reading this now isn’t the same ‘you’ playing sports, or fuming in a traffic gridlock, or drinking in a bar, or making love. In effect, you’re multiple personalities in a single body. In all your different emotional states, from incoherent-with-anger to kindly-altruistic, ‘you’ are a bunch of very different people.

Most of us are writing dystopian speculative fiction for good reasons, and lack of IQ in our leaders isn’t the issue. So, if you’ve designed your ‘superior’ homo or alien with a bigger brain, their ‘smarter’ intelligence isn’t a guarantee your new species will survive any longer than ours. We’re social mammals evolved to engage constructively with others and we do it spectacularly well. True, we’re only evolved to cope with a maximum of around 150 others in a tribal group rather than the entire interweb, but we can still operate collectively to build civilisations, tech, philosophies, the Rule of Law, and political parties, all of which require incredible individual and communal skillsets.

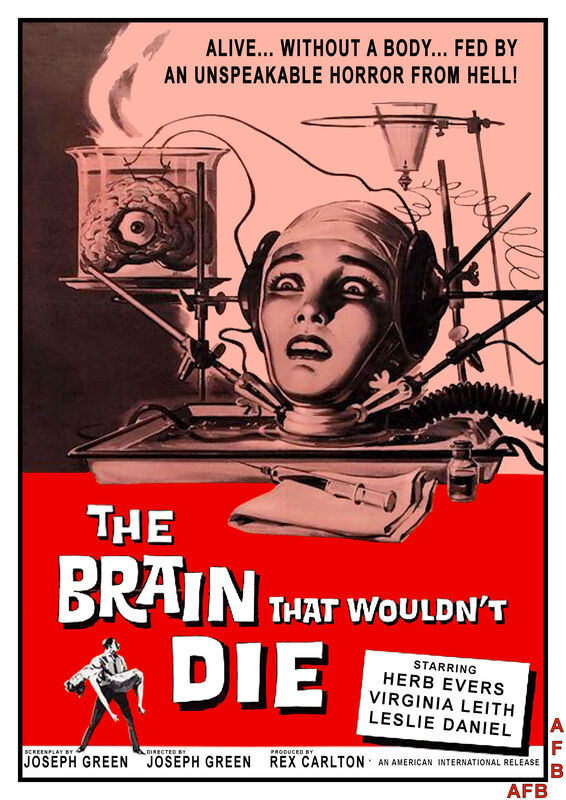

We’re also already hugely empathic as individuals and compassionate as collectives. Our intensely emotional brains motivate us to reason and problem-solve on every scale from the individual to the global. Yet those capabilities are not enough to stop us from committing civilisational suicide. It’s no longer enough to casually claim our fictional advanced creations are ‘wiser’ or ‘more empathic’ or ‘more compassionate’ or ‘more augmented’. We are already those things.

So, when you create a new homo species, or our new alien overlords, what is the critical difference that has allowed them to transcend their biological evolution? How have they avoided collective suicide? What, in other words, could we do to save the most complex object in the known universe from destroying itself? Good luck.

Okay, so there you are, wearing a lab coat, in a lab, brainy af, with billions of research dollars available to back up your idea to create an artificial intelligence (AI), which you hope will basically be better, smarter and wiser than us, and will save us from ourselves. Is it feasible? For all the hype about AI, and fears of exponentially self-evolving AI turning into a ‘Singularity’ that will rule us all, AI is just algorithms and algorithms do not a singularity make. They can build cars. They can hound and harass welfare recipients. Can they feel wistful during a poignant moment in a Vivaldi concerto? No.

Just because you might be able to design an AI that passes a Turing Test, that doesn’t mean it can perform the most critical level of intelligence underpinning it all: emote. And in case you think emotions are a useless by-product of human reasoning, you’ve got it backwards. First, we care. Then we think about why we care. Then we act. Make your AI algorithms as recursive as you like, but you’ll never make it care.

Okay, so how about renovating Homo sapiens? Transhumanists are a diverse group, but they, like most sci-fi writers, tend to focus on physical and mental ‘augmentation’. But we are already massively augmented. Mentally, the phone in your hand augments your brain into the global consciousness. You’re also physically augmented. Need to connect two pieces of wood? Transform your entire body via a hammer and a nail. Need to travel to distant places fast? Buy a plane ticket. Need to leap tall buildings? Take the elevator.

Also, critically, transhumanism is inherently fascist and, with the best will in the world, missing the point. The idea of an ‘ideal’ human ignores the historical and biological reality that, in a group, diversity is strength, even if it looks like, and sometimes is, a weakness. The Third Reich wasn’t a failed plan but a Big Lie. So, when we writers think we’ve created a ‘superior’ or ‘advanced’ human species (or a truly novel alien species for that matter), we’ve merely transposed fascist, racist and colonial templates onto the future. Just as the English thought themselves superior to First Nations Australians, so our fictional future humans or aliens are largely versions of the Colonial Lie, which is that tech, or appearance (pale skin), or bigger guns, or culture, make us somehow ‘better’. But how are post-colonial humans ‘better’ if we’re not sustainable on a global scale? What’s stopping us being better? Biology.
